Hi, Kevin here.

Thinking critically and questioning what we know and how we know it helps shape and improve what we’re doing, how we’re doing it, and eventually what we believe in. I hope this critique sparks a healthy debate about our knowledge, arguments, and beliefs as designers.

The Design Sprint approach is quite trendy and claims to address the challenges of any team, company, or organization (page 26 of “Sprint”). Many are willing to try it, often as a way to kickstart “a design mindset” within their organizations. I personally know some pretty active Sprint Masters in Europe, and I have tried the approach a few times, both as a participant and as a facilitator. I’ve been thinking about this approach ever since Jake Knapp’s book “Sprint” brought it to wider attention, and I have to say that I’m still skeptical.

“Focusing on the surface allows you to move fast and answer big questions before you commit to execution, which is why any challenge, no matter how large, can benefit from a sprint.” — Jake Knapp, Sprint (p. 28).

What triggered this article was the many articles, talks, and workshops about it over the last few weeks, along with some discussions with other professionals. First, let me clarify something: a design approach gaining in popularity can probably be seen as a “good thing” for raising awareness about design practices within companies –no problem with that. The criticism here is really about the approach itself: its highly codified process, its methodological issues, its intrinsic “premise”, and the different arguments used by the community.

General Lack Of Proper Research

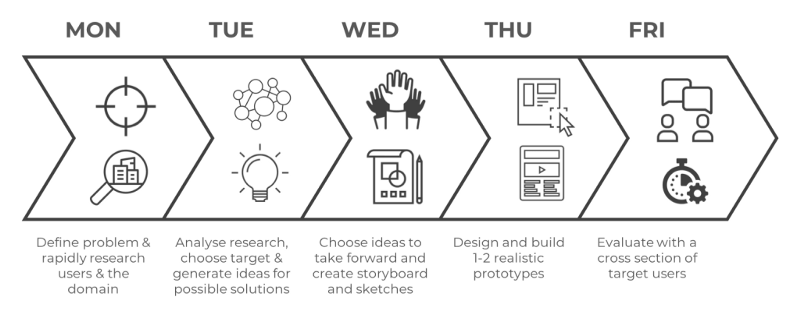

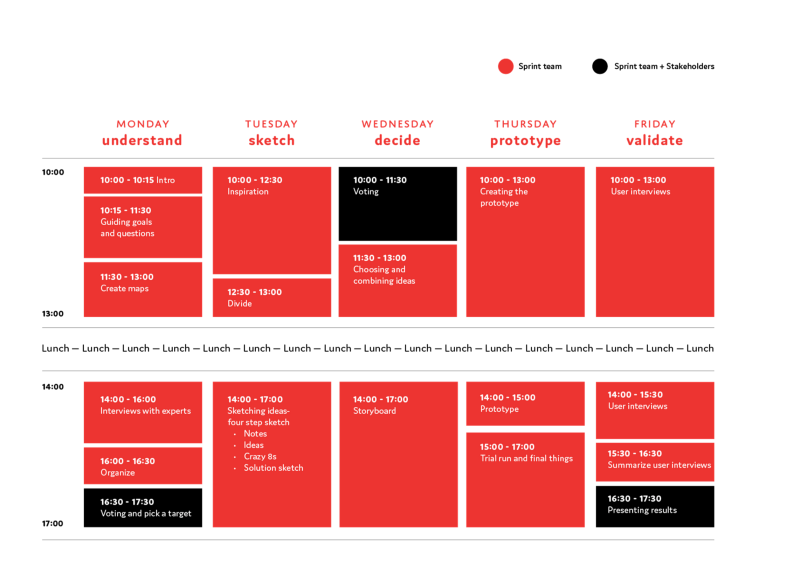

This is probably the most obvious issue when you start applying the Design Sprint: the approach doesn’t specifically encourage teams to do research. Worse, the illusion that you can compress weeks of user research into a single day is so rooted in the approach that the belief that user research is merely optional easily spreads through teams.

Because of that, teams start building heavily on assumptions, which is by definition not user-centered. Yet the approach gives the illusion that it is –thanks to the involvement of “experts”. I’m not saying it’s a bad thing to have “experts” or “user representatives” participating in the design process, on the contrary, but relying solely on them without prior field research is a mistake. This contradicts some basic user research principles and, well, most UX literature and User-Centered Design (UCD) standards (see here, here, and here), e.g. the basic difference between what people say, think, and do. Not to mention the obvious biases of users participating in the workshops (see confirmation bias). Again, that’s what we want to mitigate with field data.

The main counter-argument I’ve heard so far on that particular point is that real users are involved on the last day, when you test your solution with them through user testing sessions.

Okay, but we can easily see the bias here: you give people a solution that has been devised from assumptions. By doing so, you create a precedent, meaning that your solution becomes the reference and therefore frames any further discussion with them. This puts your solution at risk by potentially hiding major flaws in your assumptions and/or misalignments with users’ needs and context. If you seek validation only, you have just created the perfect conditions to get what you want 👉 see confirmation bias, observer-expectancy effect, and the law of the instrument.

That’s the reason why prior user research is so important: starting from observable facts, gathering insights from the field, and devising measurable criteria to reduce bias and focus on actual needs, contexts, pains, etc.

Rigid Systematic Approach

The Design Sprint approach is a highly codified step-by-step process, and I suppose this is what makes it so popular. Whatever the problem you’re trying to solve, there is one simple and clear answer. And the best part: you always know what you’ll get at the end. That’s a gold mine for consultancy firms and their clients, who like to have simple answers to complex problems. But a rigid systematic approach is contextless by definition.

“If all you have is a hammer, everything looks like a nail” – Abraham Maslow, 1966

In research (in general, not just UX), we try very hard to design our studies so that we measure the right things to test our hypothesis (and counterhypothesis). We do so by selecting the right methods to measure the right values, learn the right insights, or produce the right outcomes. “Right” here means that whatever the method, it is aligned with what we expect to learn or understand. This is critical to validating or invalidating a hypothesis, and it implies that your hypothesis is not only testable but also falsifiable.

The Design Sprint, on the other hand, does not care much about either adaptability or falsifiability: whatever your hypothesis, whatever the context, you always have to use the same methods, in a specific order.

It’s written in The Book. It’s preached by its proponents. It’s irrefutable and absolute.

For instance, let’s take user testing: it’s really great for uncovering usability issues. But is it ALWAYS the right method? Probably not.

To give you an example, let’s say you’re a startup. Chances are your goal is to know as soon as possible whether people are willing to buy your product. So you decide to run a design sprint to quickly devise a solution, you end the week with your testing sessions, and everything goes well. What does this tell you about your freshly devised solution?

You actually showed that your solution is usable, because this is what user tests measure. What does it tell us about people’s willingness to buy it? Not much, because that’s not the purpose of this method.

Testing solutions ≠ User testing

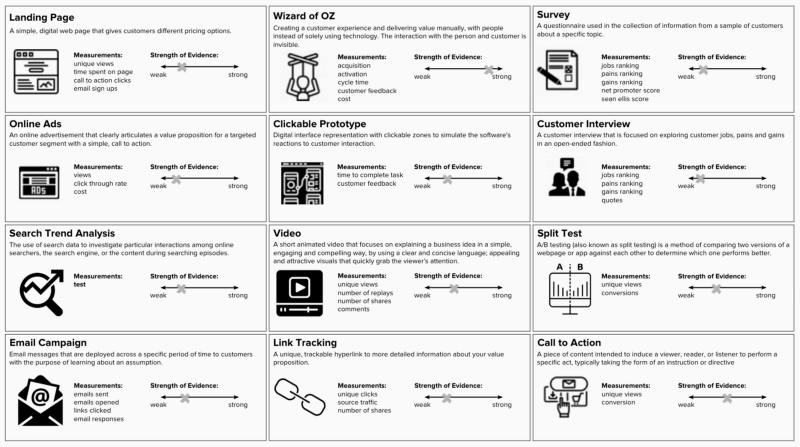

Adapting your process and picking the right methods is the job of a researcher, and when you’re testing a hypothesis (e.g. about business models, products, or services) you’re in research mode. But while user testing is one of the methods for testing solutions, testing solutions doesn’t necessarily equal user testing. It depends on what we want to know or learn.

By the way, here is a great list of experiments to test business hypotheses.

So, having the ability to adapt your process and pick the right methods is key to de-risking ideas and validating or invalidating hypotheses.

Rhetorical Speeches And Faulty Reasoning

A recent interview (in English) with a French Sprint Master contains most of the recurring rhetorical arguments I hear from Sprint facilitators (see my comments here). Let’s point out that it doesn’t answer its main question, “Design Sprints allow to innovate faster. But are they customer-centric?”, and that most answers are riddled with misleading comparisons or ready-made catchphrases. That’s not the interviewee’s fault though; it’s more an issue with a shared library of rhetorical arguments in the community.

“[So] day 1 is enough to devise the problem to solve and the users’ needs and context etc, except when things are not clear — then you probably need user research? The issue here is for a company/team to have the ability to spot the nuance between actually having enough insights, data, and information to begin a sprint VS believing that it is the case.

Either way, to have the proper criteria to devise a solution that fits real needs in real contexts, this implies the team did do user research beforehand. Otherwise, it is not user-centered (which is exactly the problem with Design Sprints and the point of the question).

So in brief, if you don’t do research that’s because you already did it, else do research… Yet, how many teams start design sprints with nothing else than their beliefs 🤔

Anyway, that’s kind of a flawed circular reasoning.” — source

In “The Top 10 Misconceptions about Design Sprints” (2017), Tim Höfer replies to the criticisms leveled against the Design Sprint. But, here again, most of his replies are diluted in a misleading mix of rhetorical arguments.

We can find the same circular reasoning about user research, in a more nuanced way.

“It’s a common misconception that in a Design Sprint, you skip research and jump straight to generating solutions. If that was the case, it would be pretty bad, but it’s just not true. The start of every Design Sprint is dedicated to learning about problem by interviewing experts and Sprint participants from the client side. What is true, however, is that long, upfront ‘Design Research’ phases are not a part of the Design Sprint (which doesn’t mean that existing data will be ignored when a Sprint kicks off).”

Some sophisms.

“Waiting for the results of in-depth design research is a perfect excuse for procrastinating on executing on solutions and testing them in reality.”

This is interesting. These kinds of pseudo-arguments seem irrefutable because they are purely rhetorical –they are catchy but don’t prove anything or provide any meaningful information to advance the debate. Funnily enough, the argument can easily be reversed and still works, which demonstrates its total vacuity:

“Executing on solutions and testing them is a perfect excuse for procrastinating on in-depth design research”

Some fallacies.

“My go-to example for this is Nokia: World-class designers and researchers spent years traveling the globe to find out how people all over Asia and the African continent use their cellphones. The idea: Gain insights how people live, so Nokia can build products that people would buy. Really fascinating stuff, but this treasure-trove of research still left Nokia unable to defend their market share against the iPhone (which, by the way, was considered a product “real consumers don’t want”). Nokia, an industry juggernaut and defining brand during my youth, on par with Nintendo, Nike and Apple collapsed in a shockingly short amount of time because their products became irrelevant.”

So Nokia’s failure as a company is enough to say that a specific practice used there is irrelevant? Hmm… 🤔 Fine, I’m waiting for a company using Design Sprints to fail then 😈. Joking aside, it seems pretty convenient that this example demonstrates specifically the inefficiency of user research… (what about management? Technologies? Agile? Etc.)

No seriously, Tim Höfer, this is faulty reasoning. Where’s the causality or even the correlation? Are there no other more parsimonious explanations? Or maybe you possess evidence others don’t have? That’s probable, after all.

What’s also highly probable is that there are multiple variables to consider, well before user research 👉 see here, here and here. In complex situations, things are rarely monocausal.

Last year, InVision published an online book called “Enterprise Design Sprints” (2018) on DesignBetter.co. Again, we can find some biased reasoning.

“The design sprint has become a trusted format for problem-solving at many large companies, but there’s still concern amongst some enterprise organizations that it’s not appropriate for their needs. The evidence is mounting to the contrary as massive organizations, public enterprises and government agencies rack up successes using sprints to overcome design and product roadblocks.” — Richard Banfield, Enterprise Design Sprints

Here we’re talking about “mounting evidence” but, as is often the case, there is nothing more to show. Can we see it? Unfortunately, a few biased articles based on people’s subjective experiences –praising their success through any kind of process– are not enough. At best we could say these are interesting “clues”, to be treated with caution because of two main issues:

- Cherry-picking bias: the “act of pointing to individual cases or data that seem to confirm a particular position while ignoring a significant portion of related cases or data that may contradict that position”. Well, I’m not saying that this is what Sprint proponents are doing intentionally, but currently we don’t have much data to support the claimed effect.

- Survivorship bias: the “logical error of concentrating on the people or things that made it past some selection process and overlooking those that did not, typically because of their lack of visibility”. After all, we only hear about success stories through what one might call “polished communication”, but what about all the failures we have never heard of? Absence of evidence is not evidence of absence.

“The evidence is mounting to the contrary as massive organizations, public enterprises and government agencies rack up successes using sprints to overcome design and product roadblocks.”

Please define “success”, because the definition seems to be conveniently vague, especially when you later say 👇

“[There] is little evidence that evidence from a design sprint also confirms a product-market fit. Unless you are also testing pricing and benchmarking competitive offers, you’ll find it very difficult to know if your validated prototype is something people will pay for or switch over to from a competitive option.”

We can also find what is now a recurring issue with Sprint proponents’ reasoning about user research. In the “What design sprints are good for” section, we can read:

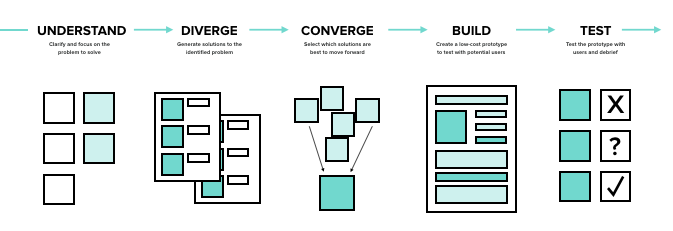

“In the Understand phase, the first of the five phases, design sprints are about building a case around what your user’s pain might be. It’s important to note that traditional research alone won’t always give decision makers the insights they need. In traditional research approaches, there’s a risk that organizations will value data that supports an existing solution over contradictory data. Design sprints are very useful in aligning potential solutions to user pains. However, it’s important to note that feasibility and usability (two other important product characteristics) are not the domain of the design sprint.”

And later, we can read this in the “What a design sprint can’t do” section:

“When planning a new project initiative or innovation, it’s best to already have research about what problems are worth solving (see chapter 1). Although the exercises in a design sprint help reveal a customer pain point and potential solution, they’re not ideal for establishing whether a market exists for that, or any, solution. Fundamental research is necessary for enterprises to discover opportunities that can then be validated with a design sprint. Don’t skip the research. Solutions without markets are destined to fail.”

Irrefutability And Magical Thinking

Last but not least: there is a sort of irrefutability in the discourse shared among Sprint proponents.

This irrefutability can be seen when, for instance, as soon as we criticize the approach, its proponents argue that we have misunderstood it, without providing any counter-argument to the criticism –which is fallacious.

It also shows in the circular thinking about user research (as we saw). Worse: it even spreads misinformation about what user research is by depicting a false representation of it, just to be able to say “see, this is nonsense! Our approach is better!” –it’s called the “straw man” tactic.

Let’s take this video for instance, where Jake Knapp and Jonathan Courtney (AJ&Smart CEO) talk about user research.

What they are saying here is symptomatic of this shared circular reasoning. In their own words (starting at 1:35): because people have to “believe” in user research to be able to perform it, and because this is “the most difficult part”, it’s better to do a Design Sprint, which is a “Trojan horse for research” because “at the end of the week, even a team totally averse to research will be hooked to learning from the users”.

So what Jake is telling us here is that because it’s hard to convince people to understand the users first, then build something from this understanding, and test it to learn (basically, the design thinking approach), it’s better to skip the first stage and go directly to build → learn. And from here comes the magical thinking (starting at 2:29):

“There’s a sort of bigger question about ‘Where good ideas come from? Where do good businesses come from?’. Do they come from doing exhaustive customer research, talking to people, and discovering what their needs are and empathizing with them or whatever? Or do they come from the insights that people in the business have, the way the folks on the team are thinking about the problem?

I want to say they come from doing research but I think quite often they actually come from people just having insights.”

Well, that’s interesting. If good ideas come from people having insights, then where do these insights come from? How do they get those insights? And if it’s not by talking with users, understanding their needs, well, are they not just beliefs?

Then, Jake, tell me: how do you tell the difference between someone who believes they have an insight and someone who actually has one? We’re still stuck in the loop.

People have “actual insights” magically popping out of their minds. Research rarely leads to innovative products or businesses. Hmm… 🤔

How is Jake able to express such definitive and absolute certainty about the ineffectiveness of research? His own experience? Other Sprint proponents’ own experiences and beliefs? Sorry, but we need more reliable sources to guard against biases such as confirmation bias or (again) survivorship bias.

Finally, is the question really about “where good ideas come from”? If you’re a company willing to consistently create great businesses, products, etc., you probably want to know what makes a good idea… a good idea. How do we define “good”? What are the criteria? Anyway, if you master this understanding you basically master your ability to produce good ideas consistently over time. And this is exactly what user research allows you to do and this is why UX maturity is a thing: your users' needs, contexts, pains, gains, etc. are such criteria for “good” ideas.

Opposing the need of businesses and products for good, innovative ideas to the understanding of users is the real misconception, because businesses and products should be aligned with users’ reality: the innovation lies in “how” you actually do that, which requires you to understand first. The problem here is that we try to apply a product-oriented approach to any level of complexity, whereas it mainly fits a “known knowns” domain and sometimes a “known unknowns” domain.

Conclusion

Most of the arguments used by Sprint proponents to counter the main criticisms of the approach are either weak or purely rhetorical. There are currently no data or actual facts available to prove or disprove any of the arguments put forward or the claimed effects.

This doesn’t mean we should not use design sprints at all. But being aware of the limitations and weaknesses of the approach is the right way to know when to use it and for what purpose. We should dissociate the false promises and weak arguments from what objectively works. The design sprint undoubtedly seems to be a great method for quickly materializing ideas and aligning people around them. However, it fails to provide a proper understanding of the users and is an inflexible way to experiment.

On a much higher level, the design sprint seems mainly to satisfy teams’ need to show that they are producing outputs quickly, which answers managers’ constant pressure to show results. This is what makes it attractive and probably easier to sell. But it is problematic, because this approach also spreads false beliefs about user research and, well, most of the design process.

Note

To be honest, I first wanted to share a quick debate I had recently about the design sprint. But after some research on the topic for the purpose of this article, I realized how deeply fallacious the discourse on the matter was. Because there is a lot to say and some elements organically grew the length of the article, I apologize for the somewhat messy structure.

Thanks for reading!

This article was first published on Design & Critical Thinking.
